Author’s Corner: Does AI have Superhuman Reasoning?

Peter Brodeur, Liam McCoy, Adam Rodman

Each year, numerous patients face preventable medical harm, disability, and death as a result of diagnostic errors. It is therefore no surprise that attempts to develop clinical decision support (CDS) tools date back to the 1950s.1 Early efforts focused on single disease categories or chief complaints, such as congenital heart disease or abdominal pain.2,3 Systems that generated differential diagnoses soon followed. The most famous was INTERNIST-1, a general internal medicine system reported in 1982. On New England Journal of Medicine (NEJM) clinicopathological conference (CPC) cases, INTERNIST-1 performed comparably to hospital clinicians but worse than subspecialists.4 Its successor, Quick Medical Reference (QMR), gave physicians direct access to the underlying knowledge base so they could augment their own diagnostic process.5 The focus thus shifted from generating differential diagnoses to supporting clinicians’ reasoning.

In the late 1980s and 1990s, however, critics pointed to cumbersome input languages, long usage times, and a lack of evidence that these systems improved patient outcomes.6,7 These concerns led to disinvestment in CDS tools. Together with similar disillusionment in other AI subfields, this produced the so-called “AI Winter.” A notable exception in the 2000s was ISABEL, the first such system to use natural language processing and to let users copy and paste input data. ISABEL also showed impressive diagnostic capability, reaching greater than 95% accuracy on NEJM CPCs.8

Fast forward to 2022, when large language models (LLMs) proved able to pass the United States Medical Licensing Examination (USMLE), a feat previously thought to be unique to human cognition.9,10 In prior studies led by the ARISE Network, GPT-4 has been shown to outperform physicians in clinical reasoning documentation,11 management reasoning,12 and probabilistic reasoning,13 while performing comparably on diagnostic reasoning tasks.14

The Age of Reasoning Models

But what if these systems could seemingly think? That is precisely what reasoning models promise. In September 2024, OpenAI released its first reasoning model, o1-preview, which works through a chain of thought at the time of prompting before it answers, creating a simulacrum of human thought. This built-in chain-of-thought approach yielded superior performance on mathematics and engineering problems.15

How the study tested o1-preview

We evaluated o1-preview using the same methodology as the four landmark ARISE Network analyses described above.11–14 We also compared its diagnostic accuracy on NEJM CPCs against historical CDS data. In addition, we compared its diagnostic performance against that of internal medicine physicians on real cases of patients admitted to the hospital from the emergency department (ED) at Beth Israel Deaconess Medical Center (BIDMC). For the ED cases, unstructured data from the medical record were entered directly into the model’s context window at three time points: triage, the end of the ED encounter, and the end of the internal medicine history and physical (H&P).

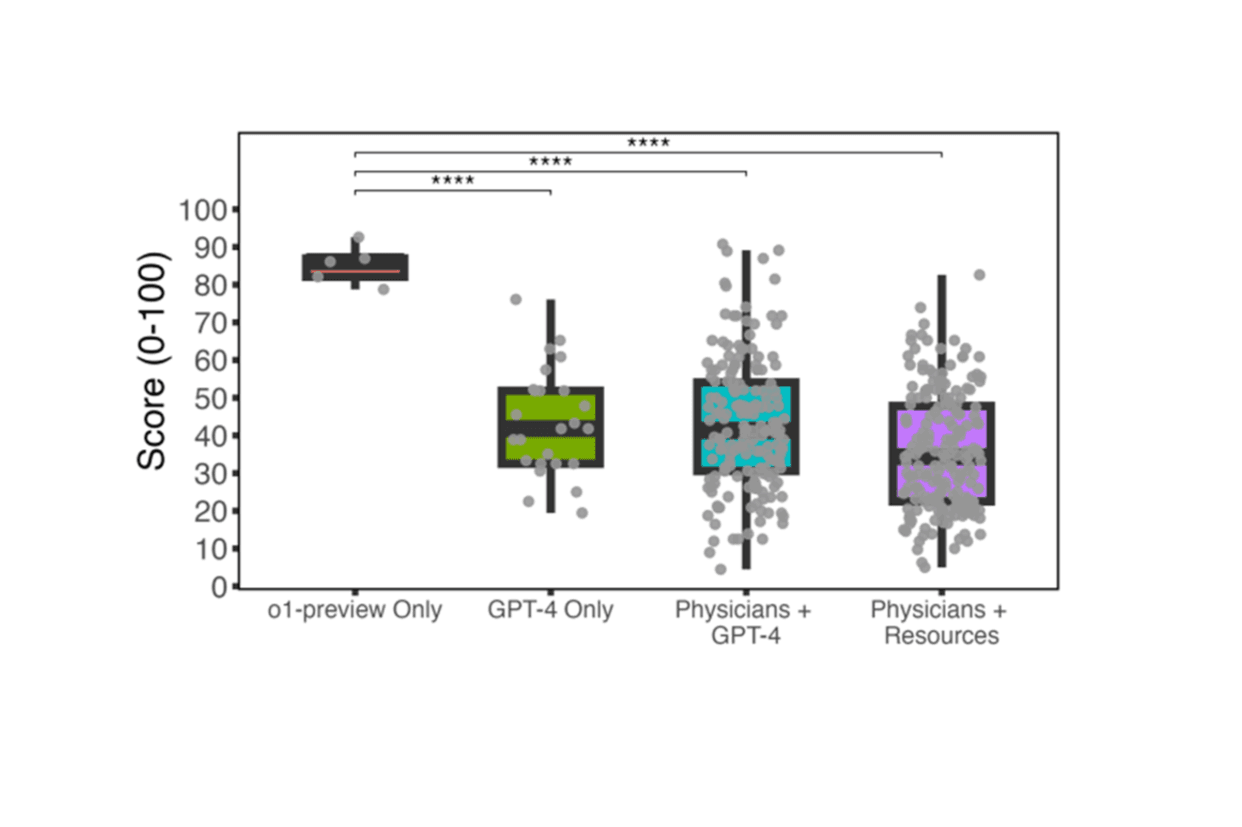

Figure A. Boxplot of o1-preview management reasoning scores compared to GPT-4 and physicians on Grey Matters management cases. Five cases were included. We generated one o1-preview response for each case. The prior study collected five GPT-4 responses to each case, 176 responses from physicians with access to GPT-4, and 199 responses from physicians with access to conventional resources.12 *: p ≤ 0.05, **: p ≤ 0.01, ***: p ≤ 0.001, ****: p ≤ 0.0001.

What we learned

We found that o1-preview performed as well as or better than earlier systems such as GPT-4 and ISABEL in diagnosing NEJM CPCs. On management reasoning tasks, o1-preview alone scored significantly higher than physicians using GPT-4 (Figure A). On clinical reasoning documentation, it outperformed GPT-4 as well as attending and resident physicians. On probabilistic and diagnostic reasoning tasks, o1-preview alone performed similarly. On the real ED cases from BIDMC, o1-preview again exhibited superhuman diagnostic performance at every time point analyzed (Figure B).

Figure B. Blinded assessment of AI and human expert second opinions on real emergency room cases. Bar plot comparing the diagnostic performance of two internal medicine attending physicians, o1, and GPT-4o on 79 clinical cases at three diagnostic touchpoints: triage in the emergency room, initial evaluation by a physician, and admission to the hospital or intensive care unit. Differential diagnoses were capped at five diagnoses for all participants. Two separate attending internal medicine physicians, blinded to the source of each differential, scored the responses using the Bond scale. Bars show the proportion of responses scored 4 or 5, meaning the differential contained the exact diagnosis or something very close to it.

What’s next?

LLMs have been shown to have superhuman reasoning capabilities in certain contexts, yet in other contexts they still perform worse than experienced clinicians.16 Because these models likely hold potential for improving patient outcomes, prospective clinical trials in real-world patient care settings are urgently needed. With implementation of these models seemingly inevitable in some capacity, health systems must prepare the monitoring, evaluation, and computational infrastructure required for safe and effective deployment.

References:

1. Ledley, R. S. & Lusted, L. B. Reasoning foundations of medical diagnosis; symbolic logic, probability, and value theory aid our understanding of how physicians reason. Science 130, 9–21 (1959).

2. Warner, H. R., Toronto, A. F., Veasey, L. G. & Stephenson, R. A Mathematical Approach to Medical Diagnosis: Application to Congenital Heart Disease. JAMA 177, 177–183 (1961).

3. De Dombal, F. T., Leaper, D. J., Horrocks, J. C., Staniland, J. R. & McCann, A. P. Human and computer-aided diagnosis of abdominal pain: further report with emphasis on performance of clinicians. Br. Med. J. 1, 376–380 (1974).

4. Miller, R. A., Pople, H. E. & Myers, J. D. Internist-1, an experimental computer-based diagnostic consultant for general internal medicine. N. Engl. J. Med. 307, 468–476 (1982).

5. Miller, R. A., McNeil, M. A., Challinor, S. M., Masarie, F. E. & Myers, J. D. The INTERNIST-1/QUICK MEDICAL REFERENCE Project—Status Report. West. J. Med. 145, 816–822 (1986).

6. Kassirer, J. P. A report card on computer-assisted diagnosis–the grade: C. N. Engl. J. Med. 330, 1824–1825 (1994).

7. Miller, R. A. & Masarie, F. E. The demise of the ‘Greek Oracle’ model for medical diagnostic systems. Methods Inf. Med. 29, 1–2 (1990).

8. Graber, M. L. & Mathew, A. Performance of a Web-Based Clinical Diagnosis Support System for Internists. J. Gen. Intern. Med. 23, 37–40 (2008).

9. Singhal, K. et al. Large language models encode clinical knowledge. Nature 620, 172–180 (2023).

10. Kung, T. H. et al. Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. PLOS Digit. Health 2, e0000198 (2023).

11. Cabral, S. et al. Clinical Reasoning of a Generative Artificial Intelligence Model Compared With Physicians. JAMA Intern. Med. 184, 581–583 (2024).

12. Goh, E. et al. GPT-4 assistance for improvement of physician performance on patient care tasks: a randomized controlled trial. Nat. Med. 31, 1233–1238 (2025).

13. Rodman, A., Buckley, T. A., Manrai, A. K. & Morgan, D. J. Artificial Intelligence vs Clinician Performance in Estimating Probabilities of Diagnoses Before and After Testing. JAMA Netw. Open 6, e2347075 (2023).

14. Goh, E. et al. Large Language Model Influence on Diagnostic Reasoning: A Randomized Clinical Trial. JAMA Netw. Open 7, e2440969 (2024).

15. OpenAI et al. OpenAI o1 System Card. Preprint at https://doi.org/10.48550/arXiv.2412.16720 (2024).

16. McCoy, L. G. et al. Assessment of Large Language Models in Clinical Reasoning: A Novel Benchmarking Study. NEJM AI 2, AIdbp2500120 (2025).